Research

Our research focuses on digital media, interactive design, and creative visualisation. Our research directions include Virtual, Augmented and Mixed Reality; Human-Computer Interaction; Image Analysis; Computer Graphics; and Computer Vision. In particular, we draw on the latest Artificial Intelligence techniques and design thinking approaches in our research projects.

Our work has been published in prestigious conferences and top-tier journals, mainly:

Conferences:

- IEEE ISMAR, IEEE VR; ACM CHI, ACM SIGGRAPH, ACM SIGGRAPH Asia

Journals:

- ACM TOG, ACM TOCHI; IEEE TVCG

We actively participate in research community events and serve as steering committee members, conference chairs and program committee members for conferences such as:

- IEEE ISMAR, IEEE VR; ACM SIGGRAPH Asia; ACM VRST, ACM CHI

Areas of Research Projects

- Augmented, Virtual and Mixed Reality

- Computational and Interaction Design

- Digital Arts and Cultural Heritage

- Multimodal Human-Computer Interaction

- Visualisation and Analytics

Representative Research Projects:

Leveraging the Crossing-based Interaction Paradigm for Interface Design

Crossing has arguably become an important paradigm in human-computer interaction, with applications in mouse input, stylus input, direct finger touch and remote display interaction. Yet its interactive potential has not been fully explored. We have therefore conducted a series of studies that leverage the expressiveness of crossing for user interface design. First, we introduced crossing-based selection in virtual reality and compared its performance with pointing-based selection. We also applied the crossing paradigm to moving target selection and proposed a model to quantify endpoint uncertainty in crossing-based moving target selection. In addition, we investigated distractor effects on crossing-based interaction.
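To illustrate how crossing differs from pointing: a crossing-based interface selects a target when the cursor's movement path passes through a goal line on the target, rather than when the user clicks inside it. The sketch below assumes each target is modelled as a 2D line segment and pointer samples arrive as a polyline; the function names are hypothetical, and this is not the implementation used in these studies.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def _orient(a: Point, b: Point, c: Point) -> float:
    """Cross product of (b - a) and (c - a); its sign tells which side of line ab c lies on."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def segments_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if segment p1-p2 strictly crosses segment q1-q2 (touching endpoints are ignored)."""
    d1 = _orient(q1, q2, p1)
    d2 = _orient(q1, q2, p2)
    d3 = _orient(p1, p2, q1)
    d4 = _orient(p1, p2, q2)
    # Each segment's endpoints must lie on opposite sides of the other segment's line.
    return d1 * d2 < 0 and d3 * d4 < 0


def first_crossed_target(path: List[Point], goals: List[Segment]) -> Optional[int]:
    """Return the index of the first goal line crossed by the pointer path, or None."""
    for a, b in zip(path, path[1:]):  # consecutive pointer samples form path segments
        for i, (g1, g2) in enumerate(goals):
            if segments_cross(a, b, g1, g2):
                return i
    return None


if __name__ == "__main__":
    # Two hypothetical vertical goal lines at x = 5 and x = 20.
    goals = [((5.0, -1.0), (5.0, 1.0)), ((20.0, -1.0), (20.0, 1.0))]
    print(first_crossed_target([(0.0, 0.0), (10.0, 0.0)], goals))
```

A stroke from (0, 0) to (10, 0) passes through the first goal line and selects target 0 without any click; in moving target selection, the goal segments would simply be updated each frame before the test.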

Huawei TU

Gesture Input for Mobile Interaction Design

Gesture interaction is a popular interaction style on mobile devices, and its popularity has raised many research questions about gesture learning, modelling, recognition and design. This project focuses on gesture design for mobile interaction from three aspects: input modalities, physical activities and holding postures. It investigates the effects of these aspects on gesture input, with the aim of designing more efficient gesture-driven interfaces for mobile interaction.

Huawei TU

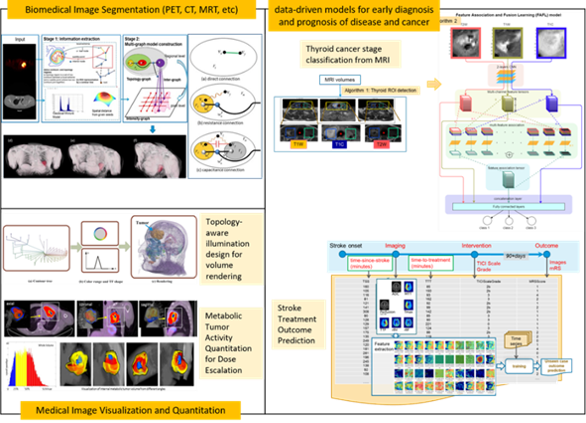

Biomedical computer vision for precision oncology

Detection and segmentation of lesions in medical images such as CT are routinely performed in radiology centres for patient diagnosis and treatment planning. This process is time-consuming and prone to inter- and intra-observer variation. Computerized methods have been developed to support efficient, precise diagnosis and treatment planning, but there is still much scope for improvement, especially when only a limited amount of data or annotations is available for training. In this project, we investigate advanced machine learning methods, including semi-supervised and weakly supervised algorithms, for the semantic segmentation of regions of interest in CT and MRI images.

Hui (Lydia) CUI

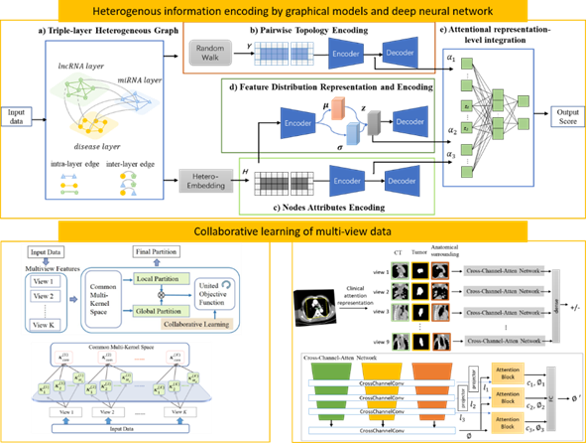

Multi-modal multi-domain data fusion and collaborative learning

Large amounts of imaging data, together with clinical tests and assessments, form the basis of precision biomedicine. This project aims to analyze these multi-modal, multi-domain data with collaborative learning and visual analytics techniques, and to translate these analyses into a better understanding of human health and disease patterns for transparent decision making and prognostic medicine.

Hui (Lydia) CUI

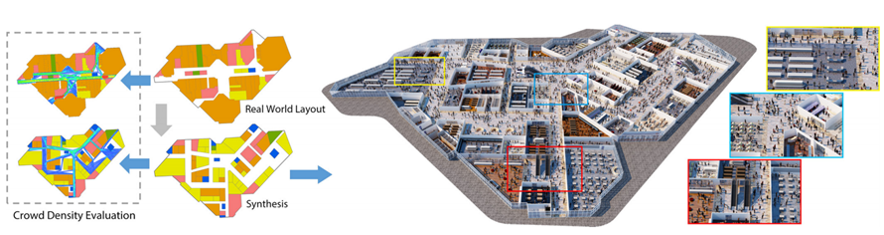

Computational Design of Mid-scale Layout using Data-driven Optimization

Given an input layout domain, such as the boundary of a shopping mall, the proposed approach synthesizes the paths and sites by optimizing three metrics that measure crowd flow properties: mobility, accessibility, and coziness. While these metrics are straightforward to evaluate with a full agent-based crowd simulation, optimizing a layout usually requires hundreds of evaluations, which would take a long time to compute even with the latest crowd simulation techniques. To overcome this challenge, we propose a novel data-driven approach in which nonlinear regressors are trained to capture the relationship between the agent-based metrics and the geometrical and topological features of a layout. The proposed approach synthesizes crowd-aware layouts and improves existing layouts with better crowd flow properties.
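The core idea, replacing repeated crowd simulations with a cheap learned predictor inside the optimization loop, can be sketched as surrogate-assisted optimization. This is a minimal sketch under stated assumptions, not the project's implementation: a layout is reduced to a hypothetical feature vector in [0, 1]^d, `toy_crowd_metric` is a made-up stand-in for the agent-based simulation, a k-nearest-neighbour regressor stands in for the nonlinear regressors, and a simple hill climber stands in for the optimizer.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def knn_regressor(train_x: Sequence[Vector], train_y: Sequence[float],
                  k: int = 3) -> Callable[[Vector], float]:
    """Fit a k-nearest-neighbour regressor, used here as a simple nonlinear surrogate."""
    data = list(zip(train_x, train_y))

    def predict(x: Vector) -> float:
        nearest = sorted(data, key=lambda d: math.dist(d[0], x))[:k]
        return sum(y for _, y in nearest) / len(nearest)

    return predict


def toy_crowd_metric(features: Vector) -> float:
    """Hypothetical stand-in for an expensive agent-based crowd simulation.
    Higher is better; it peaks when every feature equals 0.5."""
    return -sum((f - 0.5) ** 2 for f in features)


def optimise_layout(surrogate: Callable[[Vector], float], dim: int, iters: int,
                    step: float, rng: random.Random) -> Tuple[Vector, float]:
    """Hill-climb over layout feature vectors, scoring candidates only with the surrogate."""
    best = [rng.random() for _ in range(dim)]
    best_score = surrogate(best)
    for _ in range(iters):
        # Perturb the current best layout and clamp features to [0, 1].
        cand = [min(1.0, max(0.0, v + rng.gauss(0.0, step))) for v in best]
        score = surrogate(cand)
        if score > best_score:
            best, best_score = cand, score
    return best, best_score


if __name__ == "__main__":
    rng = random.Random(0)
    # 1. Run the (expensive) simulator once per sample layout to build training data.
    xs = [[rng.random(), rng.random()] for _ in range(200)]
    ys = [toy_crowd_metric(x) for x in xs]
    # 2. Train the surrogate, then 3. optimize using surrogate queries only.
    surrogate = knn_regressor(xs, ys, k=5)
    layout, predicted = optimise_layout(surrogate, dim=2, iters=500, step=0.1, rng=rng)
    print(layout, predicted, toy_crowd_metric(layout))
```

The simulator is called only to build the training set; every candidate during optimization is scored by the surrogate, which is what makes hundreds of evaluations affordable.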

Tian (Frank) FENG

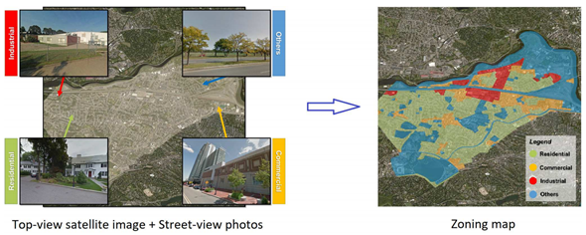

Urban Zoning Classification from Heterogeneous Imagery Data using Artificial Intelligence

Urban zoning enables various applications in land use analysis and urban planning. The proposed approach performs automatic urban zoning using higher-order Markov random fields (HO-MRF) built on multi-view imagery data, including street-view photos and top-view satellite images. In the proposed HO-MRF, top-view satellite data is segmented by a multi-scale deep convolutional neural network (MS-CNN), and the result is used in the lower-order potentials. Geo-tagged street-view data is incorporated into the higher-order potentials.
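For readers less familiar with higher-order MRFs, the energy below is a generic sketch of how such a model is commonly written, not the project's published formulation. We assume each label $x_i$ is the zoning class of a region $i$, the unary terms $\psi_i$ come from the MS-CNN segmentation of the satellite imagery, and each higher-order clique $c$ groups the regions associated with one geo-tagged street-view photo; the clique construction, the pairwise smoothness term and any weights are assumptions.

$$
E(\mathbf{x}) = \sum_{i \in \mathcal{V}} \psi_i(x_i) + \sum_{(i,j) \in \mathcal{E}} \psi_{ij}(x_i, x_j) + \sum_{c \in \mathcal{C}} \psi_c(\mathbf{x}_c),
\qquad
\mathbf{x}^{*} = \arg\min_{\mathbf{x}} E(\mathbf{x})
$$

Here $\mathcal{V}$ is the set of regions, $\mathcal{E}$ the set of neighbouring region pairs, and $\mathcal{C}$ the set of higher-order cliques; the first two sums are the lower-order potentials, and the zoning map is the labelling $\mathbf{x}^{*}$ that minimises the total energy.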

Tian (Frank) FENG

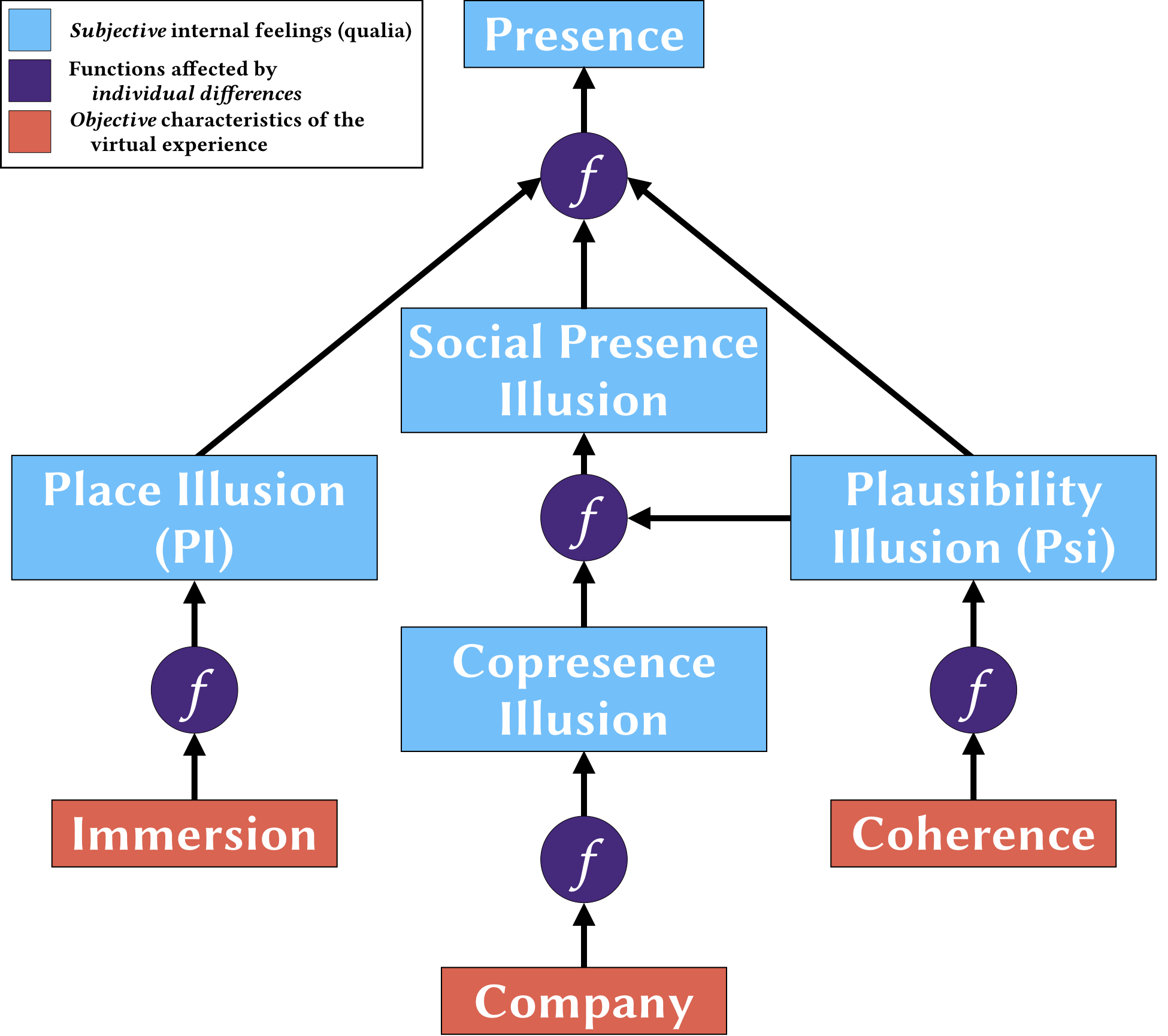

Cognitive Illusions in Virtual Environments

In a 2020 paper, my colleagues and I presented a research agenda regarding five key constructs that are part of how users experience virtual environments: immersion, coherence, Place Illusion, Plausibility Illusion, and presence. It is believed that immersion (the technical qualities of a system) and coherence (the degree to which the experience matches up with user expectations) lead to Place Illusion (the user’s feeling that they are in another place) and Plausibility Illusion (the feeling that the events a user is seeing are actually happening), and that these two feelings give rise to the sensation of presence. This project seeks to generate knowledge about these constructs and the relationships between them.

Richard Skarbez

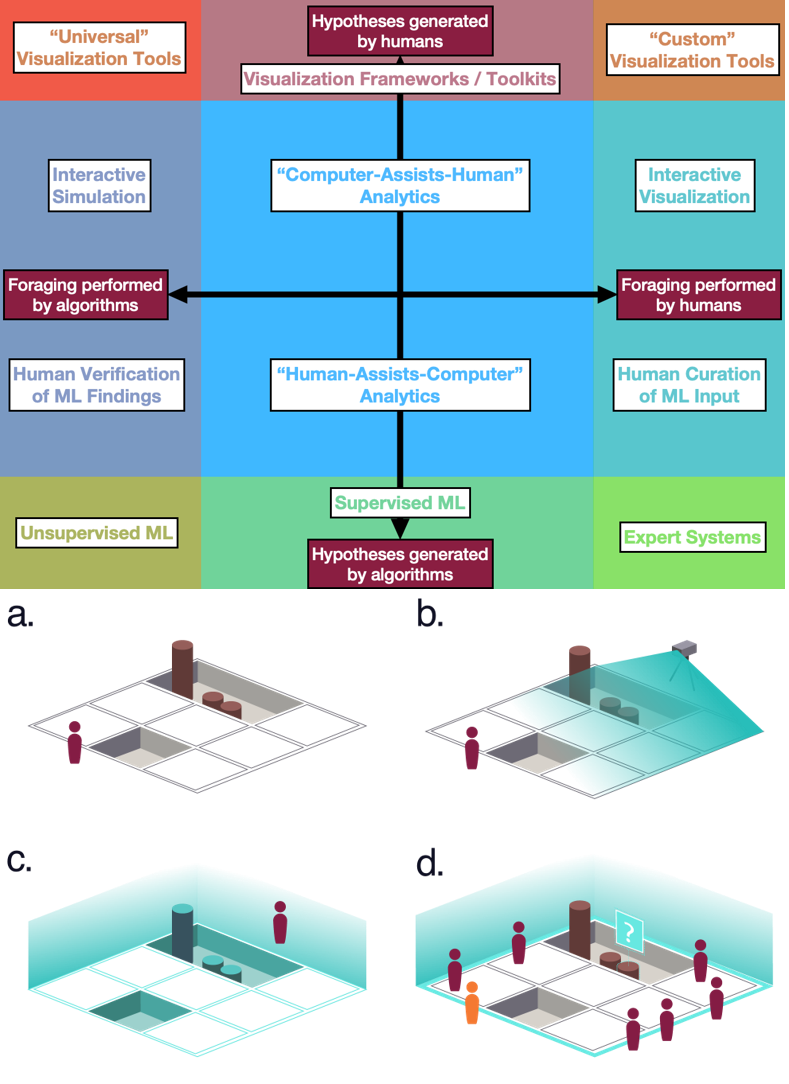

Immersive Analytics, particularly Immersive Archaeology

Advances in a variety of computing fields, including “big data”, machine learning, visualization, and augmented/mixed/virtual reality, have combined to give rise to the emerging field of immersive analytics, which investigates how these new technologies support analysis and decision making. In a 2019 paper, my colleagues and I identified some of what we consider to be the most important research questions facing immersive analytics. This project seeks to investigate these questions.

I have a particular interest in the application of immersive analytics to the field of archaeology. Archaeologists collect rich and complex spatiotemporal data in the course of their fieldwork. This data, however, frequently lies unused after it is collected because it is difficult to analyze, and the archaeology domain experts frequently lack the time and computational expertise needed to extract maximum value from their data. This project seeks to develop and evaluate tools to enable new archaeological research.

Richard Skarbez

Human Perception of and in Immersive Environments

There are high hopes that immersive technology (augmented, mixed, and virtual reality) will enable new possibilities in training, education, telepresence, and many other fields. However, for this to be the case, we need to know that users perceive these environments accurately. Such technologies also have the potential to transform the study of human perception more generally, as they offer the ability to generate experimental stimuli that are both highly controllable and ecologically valid. This project seeks to generate quantitative data and theoretical models for how humans perceive stimuli presented by immersive technologies.

Richard Skarbez

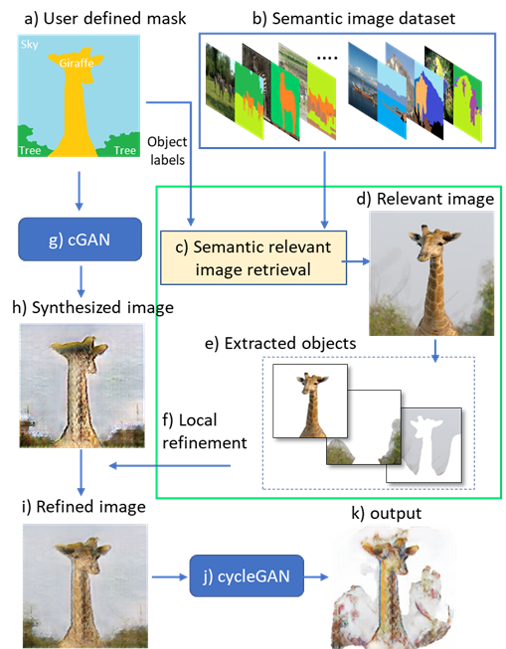

User-Guided Generative Adversarial Networks for Cultural Heritage

Automated image style transfer is of great interest given recent advances in generative adversarial networks (GANs). However, it is challenging to synthesize images from abstract masks while preserving the detailed patterns of certain art forms when only small datasets are available. This project aims to develop a GAN-based system, enhanced with user intent and prior knowledge, that generates images in the style of Cantonese porcelain from user-defined masks.

Szu-Chi (Steven) Chen

Prediction of Virtual Reality Simulator Sickness

Virtual reality (VR) has recently been widely adopted. However, many users experience visual discomfort in VR, and VR simulator sickness remains an unsolved problem in VR applications. This project aims to design an effective neural network that predicts VR simulator sickness objectively.

Minghan Du

Collaborators (countries and regions)

Australia

- Professor Andy Herries, Archaeology, La Trobe University, Australia

- Professor Mark Billinghurst, Computer Science, University of Auckland and University of South Australia

- Associate Professor Xiuying Wang, Computer Science, University of Sydney, Australia

- Associate Professor Tomasz Bednarz, Art & Design, University of New South Wales, Australia

- Dr Philippe Chouinard, La Trobe University, Australia

China

- Professor Feng Tian, Institute of Software, Chinese Academy of Sciences, China

- Professor Yingqing Xu, Art & Design, Tsinghua University, China

- Professor Yue Liu, School of Optics and Photonics, Beijing Institute of Technology, China

- Professor Yongtian Wang, School of Optics and Photonics, Beijing Institute of Technology, China

- Professor Dongdong Weng, School of Optics and Photonics, Beijing Institute of Technology, China

- Associate Professor Sai-Kit Yeung, Computer Science, Hong Kong University of Science and Technology

- Associate Professor Ji Yi, Art & Design, Guangdong University of Technology, China

Japan

- Professor Xiangshi Ren, Computer Science, Kochi University of Technology, Japan

USA

- Associate Professor Joe Gabbard, Computer Science, Virginia Tech, USA

- Associate Professor Lap-Fai Yu, Computer Science, George Mason University, USA

- Dr Shumin Zhai, Google, USA

Sponsors and supporters